It is commonly believed that music and language are processed independently in the brain, a view supported by classic findings that suggest greater left lateralization for language processing and greater right lateralization for music processing.

This has led to theories that music and language constitute independent cognitive modules. At the same time, spoken language and song rely on the same perceptual and motor systems, suggesting that there may be some sharing of information.

Research in the Auditory Perception & Action Lab addresses how people produce, perceive, and imitate pitch/time patterns from song and speech.

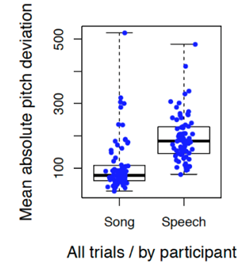

In general, people are more accurate at vocally imitating song than speech (see figure, from Pfordresher 2022). However, this difference in performance can vary by individual, task, and the measure of performance that is used.

Theoretical goals of this research include understanding how the acoustic patterning of the stimulus, the individual background of the imitator (e.g., musical training), and the social context in which production occurs each contribute to the imitation of pitch patterns in speech and song.

Applied goals include working toward more effective use of song in the rehabilitation of speech via Melodic Intonation Therapy.

Read our publications about music and language.